What is Web Scraping?

Web scraping is a method for automatically extracting data from websites. It relies on software, called a web scraper, that navigates a website, retrieves the relevant information, and extracts it for later use. The data is often converted into a structured format, such as a database or spreadsheet, for further analysis.

The value of web scraping lies in its ability to collect large amounts of data quickly. In a world where data is a valuable source of knowledge and power, web scraping lets businesses and individuals gather relevant information from web pages without entering it manually. It serves a variety of purposes, from competitive intelligence to data collection for academic research.

The effectiveness of web scraping depends largely on the quality of the scraper. A good web scraping tool can extract data from a wide range of websites, including sites that use measures to limit automated access. Software such as Octoparse, for example, automates this process and turns web data into structured, easily usable information.
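The navigate, retrieve, extract, and structure steps described above can be sketched in a few lines of Python using only the standard library. This is a minimal illustration, not how Octoparse or any other tool works internally. It assumes a hypothetical page where each product's name and price sit in `<span class="name">` and `<span class="price">` tags. It also parses an inline HTML string instead of downloading a page, so it runs offline. In a real scraper, the HTML would first be fetched (for example with `urllib.request.urlopen`), and the tag and class names would be adapted to the target site.

```python
import csv
import io
from html.parser import HTMLParser


class ProductParser(HTMLParser):
    """Collects name/price pairs from <span class="name"> and <span class="price">.

    The class names are hypothetical; a real page needs its own selectors.
    """

    def __init__(self):
        super().__init__()
        self.rows = []        # extracted records, one dict per product
        self._field = None    # field whose text we are currently inside
        self._current = {}    # partially built record

    def handle_starttag(self, tag, attrs):
        cls = dict(attrs).get("class")
        if tag == "span" and cls in ("name", "price"):
            self._field = cls

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None

    def handle_data(self, data):
        if self._field is None:
            return  # ignore text outside the spans we care about
        self._current[self._field] = data.strip()
        # A record is complete once both fields have been seen.
        if "name" in self._current and "price" in self._current:
            self.rows.append(self._current)
            self._current = {}


def html_to_csv(html: str) -> str:
    """Extract products from raw HTML and return them as CSV text."""
    parser = ProductParser()
    parser.feed(html)
    parser.close()
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=["name", "price"])
    writer.writeheader()
    writer.writerows(parser.rows)
    return out.getvalue()


SAMPLE = """
<div class="product"><span class="name">Widget</span><span class="price">9.99</span></div>
<div class="product"><span class="name">Gadget</span><span class="price">24.50</span></div>
"""

if __name__ == "__main__":
    print(html_to_csv(SAMPLE))
```

The CSV output can be opened directly in a spreadsheet. This is the "structured database or spreadsheet" end of the process.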

Why use web scraping software?

Using web scraping software has several significant advantages. First, it saves time. Collecting data manually is tedious and time-consuming. An automated web scraper can complete the same task in a fraction of the time, so users can focus on analyzing data rather than gathering it.

Web scraping software also makes data collection more accurate and consistent. Manual collection is prone to human error. A correctly configured scraper applies the same extraction rules to every page, which improves the reliability of the data collected. This is especially important in fields where accuracy is critical, such as market research or financial analysis.

Another major advantage is access to a wide variety of data sources. Web scraping software can retrieve information from many websites, including sites whose data is hard to collect by hand, and compile it into a single, structured database. This gives businesses and researchers a more complete picture of the information landscape they are studying.

Web scraping tools are also particularly useful for tracking changes on websites over time. In competitive intelligence, for example, a scraper can monitor price or product changes on competitor sites and provide up-to-date data for business strategy.

Finally, web scraping is essential for organizations that need to collect data at scale. Businesses whose analyses depend on large volumes of data, such as market trends, consumer preferences, or sentiment analysis, will find web scrapers an indispensable tool for extracting data efficiently and systematically.
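Change tracking, as in the price-monitoring example above, usually comes down to comparing two scraped snapshots. The sketch below assumes each snapshot has already been reduced to a hypothetical `{product: price}` dictionary. The function name and sample data are illustrative only.

```python
from typing import Dict, List, Tuple


def diff_prices(old: Dict[str, float], new: Dict[str, float]) -> List[Tuple[str, str]]:
    """Compare two price snapshots and describe each product that changed."""
    changes: List[Tuple[str, str]] = []
    # The union of keys covers products that appeared or disappeared between runs.
    for product in sorted(old.keys() | new.keys()):
        before, after = old.get(product), new.get(product)
        if before is None:
            changes.append((product, f"added at {after}"))
        elif after is None:
            changes.append((product, "removed"))
        elif before != after:
            changes.append((product, f"{before} -> {after}"))
    return changes


if __name__ == "__main__":
    yesterday = {"Widget": 9.99, "Gadget": 24.5}
    today = {"Widget": 8.99, "Doohickey": 5.0}
    for product, change in diff_prices(yesterday, today):
        print(f"{product}: {change}")
```

You can run the same scraper on a schedule and pass consecutive snapshots to `diff_prices` to get a simple change log. A monitoring tool would typically add alerting and storage on top of this idea.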

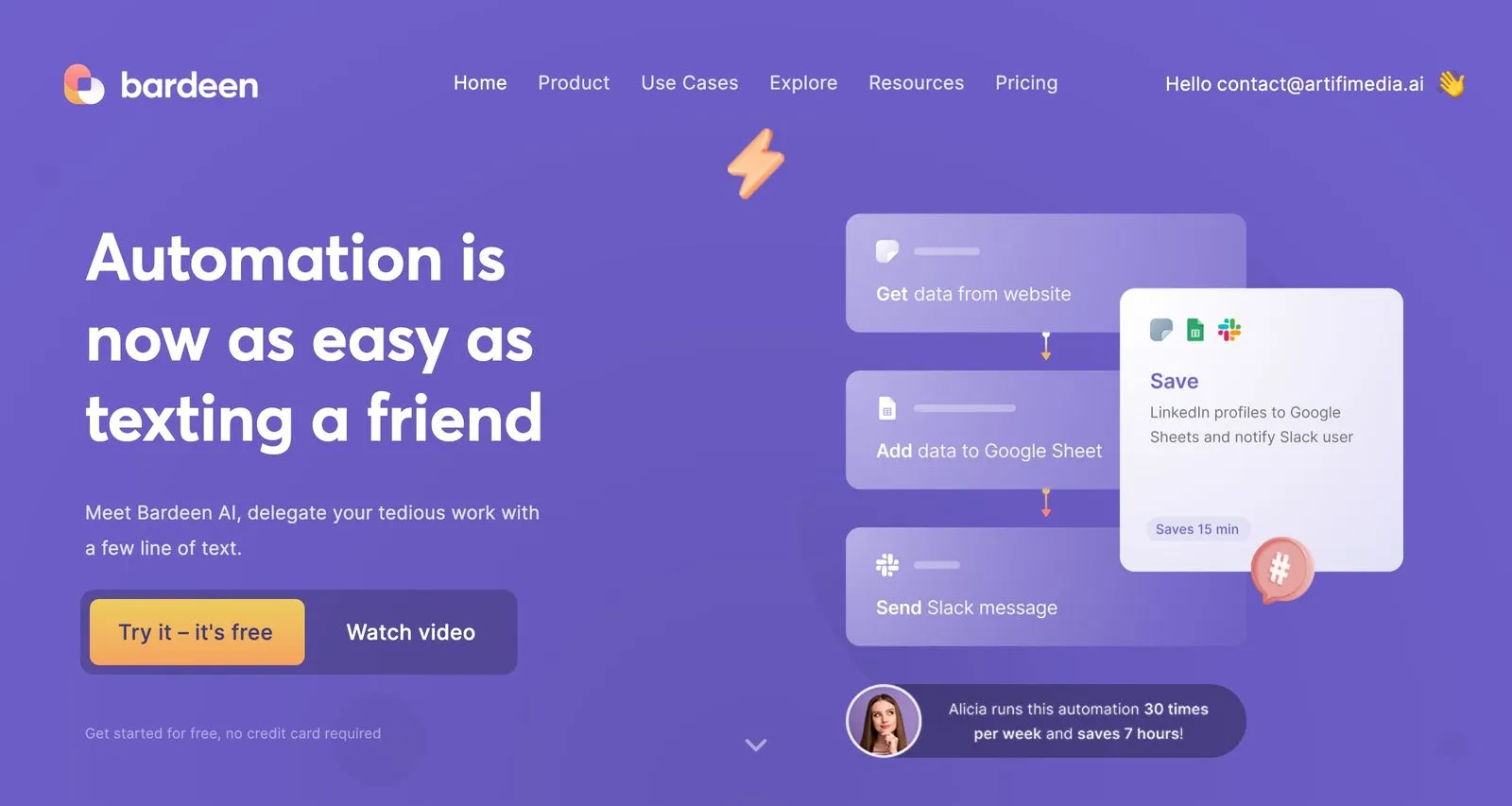

1. Bardeen

Bardeen presents itself as a powerful automation solution for repetitive tasks, especially web scraping. It uses artificial intelligence to make automation as easy as sending a message. You can create automated workflows in a few lines of text and adapt them to your specific needs. This approach eliminates manual copying and pasting and makes it easy to extract data from various websites. Bardeen stands out for its wide range of features and its compatibility with many popular applications. It is available in several pricing plans, including a free version, and is well suited to startups, businesses, and online retailers.

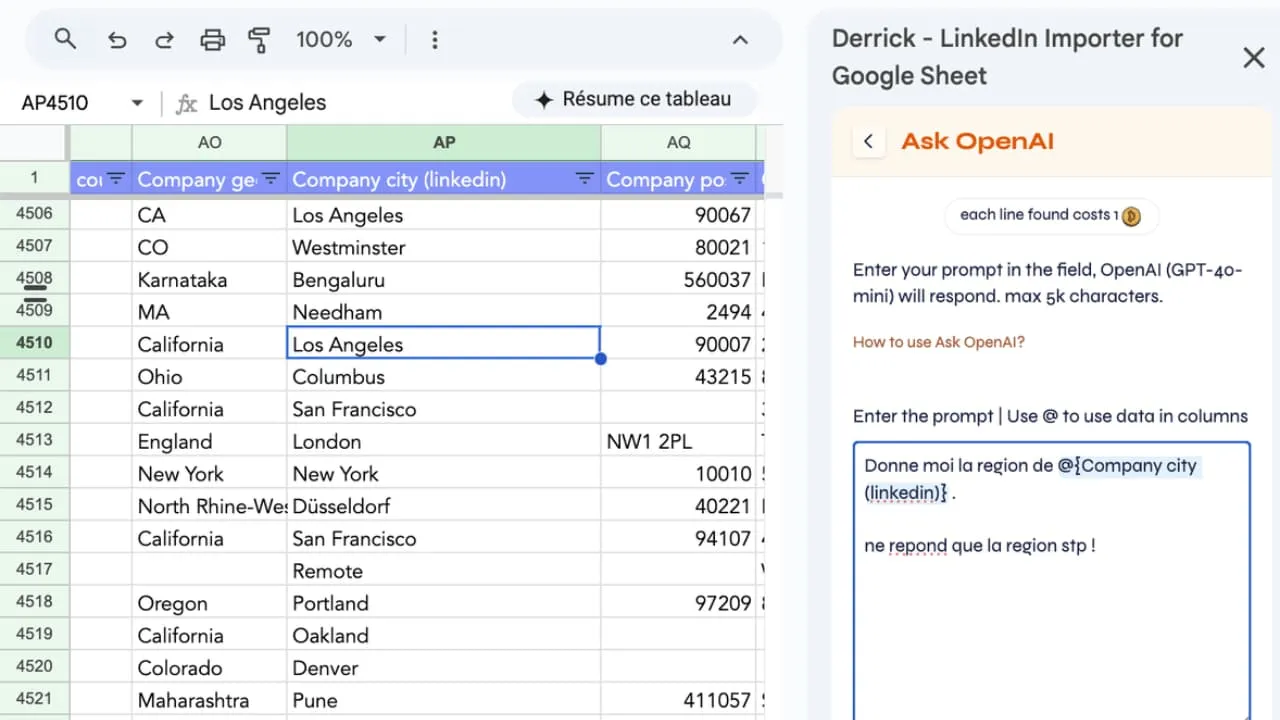

2. Derrick

Derrick is an all-in-one AI scraping, enrichment, and automation tool that connects directly to Google Sheets. The following simple example shows how it can enrich your data and generate personalized content in prospecting or content-generation workflows.

Start by retrieving your data into a spreadsheet in one click: a list of leads, companies, or contacts. Derrick's advanced enrichment features automatically add more than 100 data points to each row: last name, first name, email, phone number, job title, website, estimated traffic, LinkedIn profile, and more.

Once this data is in place, you can use the AskOpenAI or AskClaude function to generate personalized content at scale.

Here is a simple example prompt: "You are an expert in cold email. You're talking to {{firstname}}, who is {{job}}. He's having trouble with {{painpoint}}. Your service solves this problem with [your solution]. Write an icebreaker in 1 short and powerful sentence." Derrick automatically replaces the variables ({{firstname}}, {{job}}, {{painpoint}}) with the values from each row and generates a unique message for each contact.

All without leaving Google Sheets.

This type of workflow turns raw data into contextualized content at scale, in minutes. It is a considerable asset for growth, sales, and recruitment teams, or for anyone who wants to use AI at scale in a simple, concrete way.
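The variable substitution Derrick performs on each row can be illustrated with a short Python sketch. This is not Derrick's implementation. It only shows the general idea of filling `{{column}}` placeholders from one spreadsheet row at a time, and it stops before the model call that functions such as AskOpenAI or AskClaude would make. The `fill_template` and `build_prompts` names and the sample rows are hypothetical.

```python
import re
from typing import Dict, List

# Matches placeholders such as {{firstname}} and captures the column name.
PLACEHOLDER = re.compile(r"\{\{(\w+)\}\}")


def fill_template(template: str, row: Dict[str, str]) -> str:
    """Replace each {{column}} placeholder with that row's value.

    A missing column raises KeyError, so incomplete rows are noticed
    instead of silently producing a broken prompt.
    """
    return PLACEHOLDER.sub(lambda m: row[m.group(1)], template)


def build_prompts(template: str, rows: List[Dict[str, str]]) -> List[str]:
    """Produce one personalized prompt per spreadsheet row."""
    return [fill_template(template, row) for row in rows]


TEMPLATE = "You're talking to {{firstname}}, who is {{job}}. He's having trouble with {{painpoint}}."

ROWS = [
    {"firstname": "Tom", "job": "a sales manager", "painpoint": "low reply rates"},
    {"firstname": "Marc", "job": "a recruiter", "painpoint": "finding qualified candidates"},
]

if __name__ == "__main__":
    for prompt in build_prompts(TEMPLATE, ROWS):
        print(prompt)
```

Raising `KeyError` on a missing column is a deliberate choice. It is better to catch a row that lacks a column before any message is generated than to send a prompt with an unfilled placeholder.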

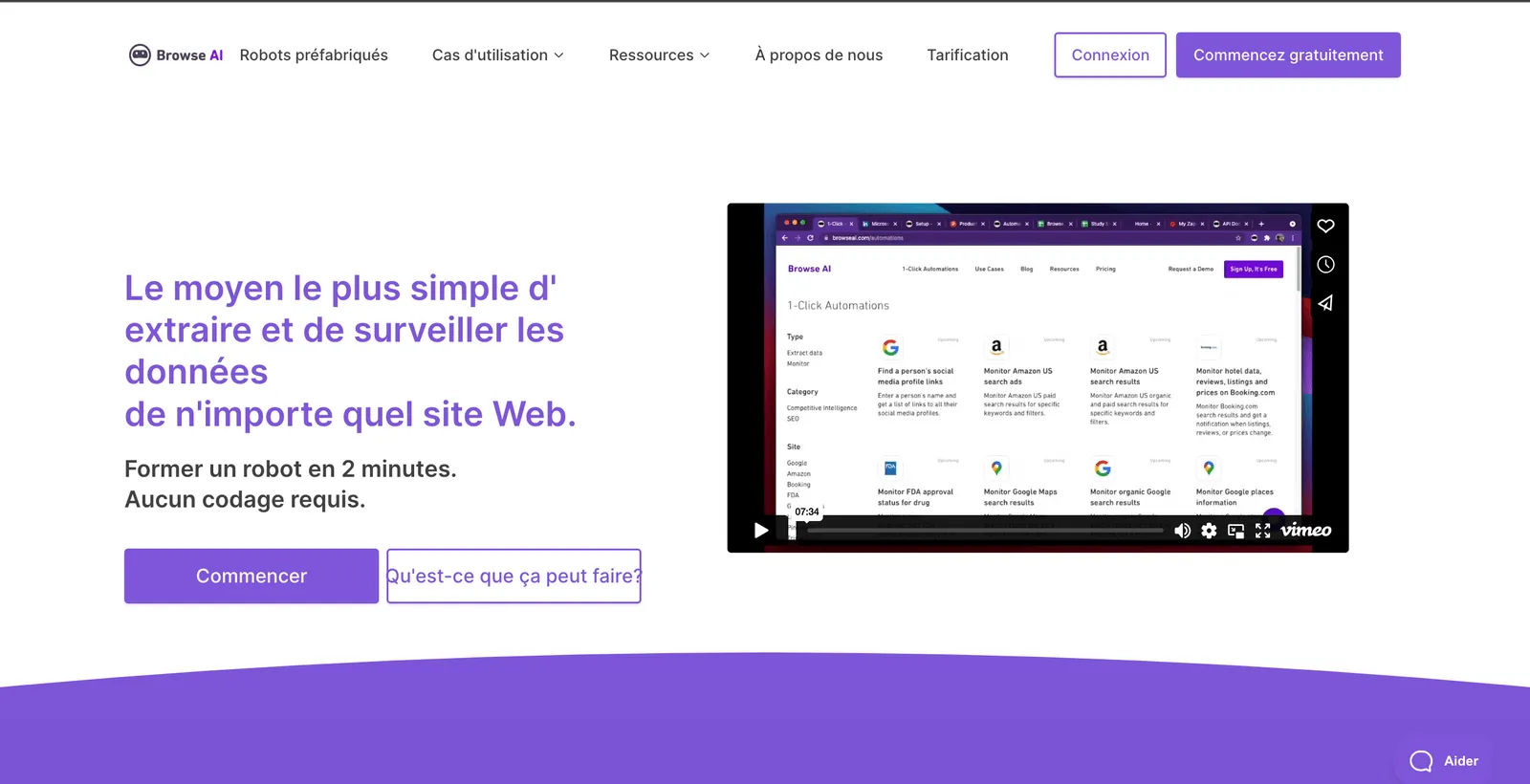

3. Browse AI

Browse AI is a platform designed to simplify web data extraction and monitoring. It lets you train a robot in about two minutes, without any coding skills. Browse AI extracts specific data from a website and converts it into a spreadsheet that is filled in automatically. The platform also offers a monitoring feature that notifies you of changes on the sites you track. With pre-built robots for common use cases, Browse AI is particularly suited to businesses looking for an efficient, user-friendly solution.

4. MrScraper

MrScraper is a visual web scraping tool designed to extract data from websites easily while avoiding blocks. Its intuitive interface automates the tedious parts of the scraping process. MrScraper uses real browsers and proxy rotation to minimize blocking. It also offers features such as built-in scheduling and an API for integration with other systems. Because scrapers can be built without code, data extraction is accessible to everyone, from individuals to startups and larger businesses. MrScraper suits those looking for a flexible and effective approach to web scraping.

5. Kadoa

Kadoa uses artificial intelligence to simplify web data extraction. Instead of requiring a custom scraper for each data source, Kadoa offers a fast, accurate method that adapts to changes in a site's structure. It transforms the extracted data, checks its accuracy, and makes it available through an API. Its automatic scraper generation and intelligent crawling make Kadoa particularly suitable for businesses that need a reliable, scalable data extraction solution.

6. WebScrape AI

WebScrape AI is designed to make online data collection possible without coding skills. This intuitive, affordable tool adapts to the varied needs of businesses and lets users customize their data collection preferences. The user only has to enter the URL and the elements to extract, and the tool takes care of the rest, saving valuable time. It uses advanced algorithms to keep the collected data accurate and reliable. WebScrape AI is therefore well suited to companies that want to automate data collection while keeping it accurate and efficient.

The criteria for choosing the best web scraping software

When selecting web scraping software, several factors need to be taken into account. It is crucial to assess how easy the software is to use, especially for users without advanced technical skills. The features offered also matter. These include task automation, the quality of the extracted data, and the ability to navigate complex websites. Finally, good customer support and regular updates are essential for a smooth and efficient user experience.

How do I do web scraping legally?

Web scraping can raise legal issues, particularly around privacy and copyright. It is vital to understand the laws that apply in your region and to comply with the terms of use of the targeted websites. Ethical web scraping also means not overloading site servers and respecting personal data.
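One common way to avoid overloading servers is to honor a site's `robots.txt` file and pause between requests. The sketch below uses Python's standard `urllib.robotparser` module. The user-agent string, the default one-second delay, and the injected `fetch` function are assumptions for illustration. Honoring `robots.txt` is good practice, but it does not replace checking the terms of use and applicable law.

```python
import time
import urllib.robotparser
from typing import Callable, Iterable, List


def load_rules(robots_txt: str) -> urllib.robotparser.RobotFileParser:
    """Parse robots.txt content that has already been downloaded."""
    rules = urllib.robotparser.RobotFileParser()
    rules.parse(robots_txt.splitlines())
    return rules


def polite_crawl(
    urls: Iterable[str],
    rules: urllib.robotparser.RobotFileParser,
    fetch: Callable[[str], str],
    user_agent: str = "example-scraper",  # hypothetical user-agent name
    default_delay: float = 1.0,           # assumed pause when none is specified
    sleep: Callable[[float], None] = time.sleep,
) -> List[str]:
    """Fetch only the URLs robots.txt allows, pausing between requests."""
    delay = rules.crawl_delay(user_agent)
    if delay is None:
        delay = default_delay  # the site did not specify a Crawl-delay
    pages = []
    for url in urls:
        if not rules.can_fetch(user_agent, url):
            continue  # skip paths the site asks crawlers not to visit
        pages.append(fetch(url))
        sleep(delay)  # spread requests out so the server is not overloaded
    return pages


if __name__ == "__main__":
    robots = "User-agent: *\nDisallow: /private/\nCrawl-delay: 2\n"
    urls = ["https://example.com/products", "https://example.com/private/data"]
    # Stand-in fetcher and no-op sleep; a real crawler would download pages over HTTP.
    pages = polite_crawl(urls, load_rules(robots), fetch=lambda u: f"<html>{u}</html>", sleep=lambda s: None)
    print(pages)
```

Passing `fetch` and `sleep` as parameters keeps the politeness logic separate from networking, so it can be checked without contacting any site.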

The benefits of an easy-to-use web scraper

An easy-to-use web scraper makes data extraction accessible to a wider audience. Users without programming skills can enjoy the advantages of web scraping, such as quick access to relevant data and the ability to follow market trends in real time.

Free vs paid web scrapers: What's the difference?

Free web scrapers are often sufficient for simple and one-off tasks. However, for more complex and regular needs, the paid versions offer more advanced features, better reliability, and superior customer support. The choice will depend on the specific needs and budget of the user.

How do you collect data effectively with a web browser?

Web scraping browser extensions can greatly simplify data collection. They let users retrieve information from web pages directly in the browser, without installing a separate application. These extensions are often user-friendly and can be a great starting point for those who are new to web scraping.

The impact of web scraping on business data management

Web scraping has a considerable impact on data management in businesses. It allows for fast and effective data collection, which is essential for informed decision-making, market analysis, and competitive intelligence. The extracted data can help identify emerging trends, optimize marketing strategies, and improve customer experiences.

Future trends in web scraping

The future of web scraping is moving towards a greater integration of artificial intelligence and machine learning, allowing for more targeted and contextual data collection. Real-time scraping is also becoming increasingly important, offering instant and up-to-date data. These developments promise to make web scraping even more powerful and adaptable to the changing needs of businesses and individuals.

Summary of key points

- Understanding Web Scraping: It is an essential tool for extracting data from websites.

- Software Choices: Depends on ease of use, features, customer support, and specific needs.

- Legality and Ethics: It is important to respect the law and the terms of use of the sites you scrape.

- Benefits of Easy-to-Use Scrapers: Make scraping accessible to everyone.

- Free vs Paid: The paid versions offer more features and reliability for complex tasks.

- Using Browser Extensions: Simplifies data collection for beginners.

- Impact on Businesses: Improves decision-making and market analysis.

- Future trends: AI and real-time scraping will play an increasing role.